Dear friends,

As we approach the end of the year, many of us consider setting goals for next year. I wrote about setting learning goals in a previous letter. In this one, I’d like to share a framework that I’ve found useful: process goals versus outcome goals.

When people think about setting goals, most gravitate toward outcome goals. But they have a downside: They’re often not fully within your control, and setbacks due to bad luck can be demoralizing. In contrast, process goals are more fully within your control and can lead more reliably toward the outcome you want.

Learning is a lifelong process. Though it can have a profound impact, often it takes time to get there. Thus, when it comes to learning, I usually set process goals in addition to outcome goals. Process goals for learning can help you keep improving day after day and week after week, which will serve you better than a burst of activity in which you try to cram everything you need to know.

Keep learning!

Ring in the New

We leave behind a year in which AI showed notable progress in research as well as growing momentum in areas such as healthcare, logistics, and manufacturing. Yet it also showed its power to do harm, notably its ability to perpetuate bias and spread misinformation. We reviewed these events in our winter holiday and Halloween special issues. The coming year holds great potential to bring AI’s benefits to more people while ameliorating flaws that can lead to bad outcomes. In this issue, AI leaders from academia and industry share their highest hopes for 2022.

Abeba Birhane: Clean Up Web Datasets

From language to vision models, deep neural networks are marked by improved performance, higher efficiency, and better generalization. Yet these systems are also marked by perpetuation of bias and injustice, inaccurate and stereotypical representation of groups, lack of explainability, and brittleness. I am optimistic that we will move slowly and build more equitable AI, thanks to critical scholars who have been calling for caution and foresight. I hope we can adopt measures that mitigate these impacts as a routine part of building and deploying AI models.

The field does not lack optimism. In fact, everywhere you look, you find overenthusiasm, overpromising, overselling, and exaggeration of what AI models are capable of doing. Mainstream media outlets aren’t the only parties guilty of making unsustainable claims, overselling capabilities, and using misleading language; AI researchers themselves do it, too. Language models, for example, are given human-like attributes such as “awareness” and “understanding” of language, when in fact models that generate text simply predict the next word in a sequence based on the previous words, with no understanding of underlying meaning. We won't be able to foresee the impact our models have on the lives of real people if we don't see the models themselves clearly. Acknowledging their limitations is the first step toward addressing the potential harms they are likely to cause.

What is more concerning is the disregard towards work that examines datasets. As models get bigger and bigger, so do datasets. Models with a trillion parameters require massive training and testing datasets, often sourced from the web. Without the active work of auditing, carefully curating, and improving such datasets, data sourced from the web is like toxic waste. Web-sourced data plays a critical role in the success of models, yet critical examination of large-scale datasets is underfunded and underappreciated.
Past work highlighting such issues is marginalized and undervalued. Scholars such as Deborah Raji, Timnit Gebru, and Joy Buolamwini have been at the forefront of doing the dirty and tiresome work of cleaning up the mess. Their insights should be applied at the core of model development. Otherwise, we stand to build models that reflect the lowest common denominators of human expression: cruelty, bigotry, hostility, and deceit.

My own work has highlighted troubling content, from misogynistic and racial slurs to malignant stereotypical representations of groups, found in large-scale image datasets such as TinyImages and ImageNet. One of the most distressing things I have ever had to do as a researcher was to sift through LAION-400M, the largest open-access multimodal dataset to date. Each time I queried the dataset with a term that was remotely related to Black women, it produced explicit and dehumanizing images from pornographic websites.

Such work needs appropriate allocations of time, talent, funding, and resources. Moreover, it requires support for the people who must do this work. It causes deep, draining emotional and psychological trauma. The researchers who do this work, especially people of color who are often in precarious positions, deserve pay commensurate with their contribution as well as access to counseling to help them cope with the experience of sifting through what can be horrifying, degrading material.

The nascent work in this area so far (and the acknowledgement, however limited, that it has received) fills me with hope for the coming year. Instead of blind faith in models and overoptimism about AI, let’s pause and appreciate the people who are doing the dirty background work to make datasets, and therefore models, more accurate, just, and equitable.
Then, let's move forward, with due caution, toward a future in which the technology we build serves the people who suffer disproportionately negative impacts; in the words of Pratyusha Kalluri, towards technology that shifts power from the most to the least powerful. My highest hope for AI in 2022 is that this difficult and valuable work, and those who do it, especially Black women, will become part and parcel of mainstream AI research. These scholars are inspiring the next generation of responsible and equitable AI. Their work is reason not for defeatism or skepticism but for hope and cautious optimism.

Abeba Birhane is a cognitive science PhD researcher at the Complex Software Lab in the School of Computer Science at University College Dublin.

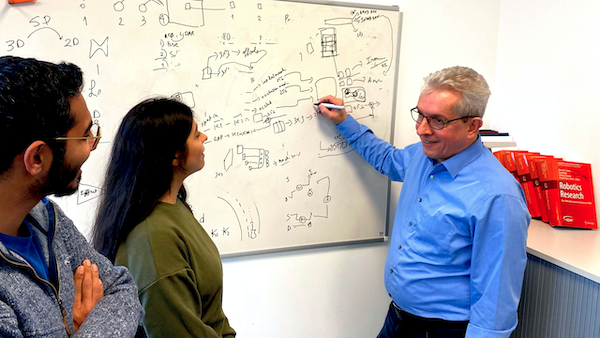

Wolfram Burgard: Train Robots in the Real World

Robots are tremendously useful machines, and I would like to see them applied to every task where they can do some good. Yet we don’t have enough programmers for all this hardware and all these tasks. To be useful, robots need to be intelligent enough to learn from experience in the real world and communicate what they’ve learned for the benefit of other robots. I hope that the coming year will see great progress in this area.

Unlike many typical machine learning systems, robots need to be highly reliable. If you’re using a face detection system to find pictures of friends in an image library, it’s not much of a problem if the system fails to find a particular face or finds an incorrect one. But mistakes can be very costly when physical systems interact with the real world. Consider a warehouse robot that surveys shelves full of items, identifies the ones that a customer has paid for, grasps them, and puts them in a box. (Never mind an autonomous car that could cause a crash if it makes a mistake!) Whether the warehouse robot classifies objects accurately isn’t a matter of life and death, but even if its classification accuracy is 99.9 percent, about one in 1,000 picks will send a customer the wrong item.

After decades of programming robots to act according to rules tailored for specific situations, roboticists now embrace machine learning as the most promising path to building machines that achieve human performance in tasks like the warehouse robot’s pick-and-place. Deep learning provides excellent visual perception, including object recognition and semantic segmentation. Meanwhile, reinforcement learning offers a way to learn virtually any task. Together, these techniques offer the most promising path to harnessing robots everywhere they would be useful in the real world.

What’s missing from this recipe? The real world itself. We train visual systems on standardized datasets, and we train robot behaviors in simulated environments.
Even when we don’t use simulations, we keep robots cooped up in labs. There are good reasons to do this: Benchmark datasets represent a useful data distribution for training and testing on particular tasks, simulations allow dirt-cheap virtual robots to undertake immense numbers of learning trials in relatively little time, and keeping robots in the lab protects them, and nearby humans, from potentially costly damage.

But it is becoming clear that neither datasets nor simulations are sufficient. Benchmark tasks are more tightly defined than many real-world applications, and simulations and labs are far simpler than real-world environments. Progress will come more rapidly as we get better at training physical robots in the real world.

To do this, we can’t treat robots as solitary learners that bumble their way through novel situations one at a time. They need to be part of a class, so they can inform one another. This fleet-learning concept can unite thousands of robots, all learning on their own and from one another by sharing their perceptions, actions, and outcomes. We don’t yet know how to accomplish this, but important work in lifelong learning and incremental learning provides a foundation for robots to gain real-world experience quickly and cost-effectively. Then they can sharpen their knowledge in simulations and take what they learn in simulations back to the real world in a loop that takes advantage of the strengths of each environment.

In the coming year, I hope that roboticists will shut down their sims, buy physical robots, take them out of the lab, and start training them on practical tasks in real-world settings. Let’s try this for a year and see how far we get!

Wolfram Burgard is a professor at the University of Freiburg, where he heads the Autonomous Intelligent Systems research lab.

Alexei Efros: Learning From the Ground Up

Things are really starting to get going in the field of AI. After many years (decades?!) of focusing on algorithms, the AI community is finally ready to accept the central role of data and the high-capacity models that are capable of taking advantage of this data. But when people talk about “AI,” they often mean very different things, from practical applications (such as self-driving cars, medical image analysis, robo-lawyers, image/video editing) to models of human cognition and consciousness. Therefore, it might be useful to distinguish two broad types of AI efforts: semantic or top-down AI versus ecological or bottom-up AI.

Alexei Efros is a professor of computer science at UC Berkeley.

Chip Huyen: AI That Adapts to Changing Conditions

Until recently, big data processing has been dominated by batch systems like MapReduce and Spark, which allow us to periodically process a large amount of data very efficiently. As a result, most of today’s machine learning workload is done in batches. For example, a model might generate predictions once a day and be updated with new training data once a month.

Chip Huyen works on a startup that helps companies move toward real-time machine learning. She teaches Machine Learning Systems Design at Stanford University.

Yoav Shoham: Language Models That Reason

I believe that natural language processing in 2022 will re-embrace symbolic reasoning, harmonizing it with the statistical operation of modern neural networks. Let me explain what I mean by this.

Yoav Shoham is a co-founder of AI21 Labs and professor emeritus of computer science at Stanford University.

Yale Song: Foundation Models for Vision

Large models pretrained on immense quantities of text have been proven to provide strong foundations for solving specialized language tasks. My biggest hope for AI in 2022 is to see the same thing happen in computer vision: foundation models pretrained on exabytes of unlabeled video. Such models, after fine-tuning, are likely to achieve strong performance and provide label efficiency and robustness for a wide range of vision problems. Learning from large amounts of unlabeled video poses unique challenges that must be addressed by both fundamental AI research and strong engineering efforts:

These are exciting research and engineering challenges for which I hope to see significant advances in 2022.

Yale Song is a researcher at Microsoft Research in Redmond, where he works on large-scale problems in computer vision and artificial intelligence.

Matt Zeiler: Advance AI for Good

There’s a reason why artificial intelligence is sometimes referred to as “software 2.0”: It represents the most significant technological advance in decades. Like any groundbreaking invention, it raises concerns about the future, and much of the media focus is on the threats it brings. And yet, at no point in human history has a single technology offered so many potential benefits to humanity. AI is a tool whose goodness depends on how we use it.


Thoughts, suggestions, feedback? Please send them to thebatch@deeplearning.ai. To keep our newsletter out of your spam folder, add our email address to your contacts list.
