Dear friends,

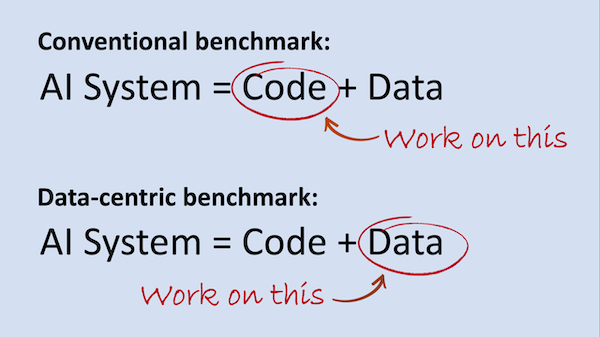

Benchmarks have been a significant driver of research progress in machine learning. But they've driven progress in model architecture, not approaches to building datasets, which can have a large impact on performance in practical applications. Could a new type of benchmark spur progress in data-centric AI development?

When AI was shifting toward deep learning over a decade ago, I didn’t foresee how many thousands of innovations and research papers would be needed to flesh out core tenets of the field. But now I think an equally large amount of work lies ahead to support a data-centric approach. For example, we need to develop good ways to:

Benchmarks and competitions in which teams are asked to improve the data rather than the code would better reflect the workloads of many practical applications. I hope that such benchmarks will also spur research and help engineers gain experience working on data. The human-computer interaction (HCI) community also has a role to play in designing user interfaces that help developers and subject-matter experts work efficiently with data.

On social media (Twitter, LinkedIn, Facebook), I asked for feedback on the idea of a data-centric competition. I’ve read all the responses so far. Thanks to all who replied! If you have thoughts on this, please join the discussion there.

Keep learning!

Andrew

News

Face Recognition for the Masses

A secretive start-up matches faces online as a free service.

What’s new: Face recognition tech tends to be marketed to government agencies, but PimEyes offers a web app that lets anyone scan the internet for photos of themself, or of anyone they have a picture of. The company says it aims to help people control their online presence and fight identity theft, but privacy advocates are concerned that the tool could be used to monitor or harass people, The Washington Post reported. You can try it here.

How it works: PimEyes has extracted geometric data from over 900 million faces it has found online. It claims not to crawl social media sites, but images from Instagram, Twitter, and YouTube have shown up in its results.

Behind the news: Free online face matching is part of a broader mainstreaming of face recognition and tools to counter it.

Why it matters: The widespread ability to find matches for any face online erodes personal privacy. It also adds fuel to efforts to regulate face recognition, which could result in restrictions that block productive uses of the technology.

We’re thinking: We’re all poorer when merely posting a photo on a social network puts privacy at risk. The fact that such a service is possible doesn’t make it a worthy use of an engineer’s time and expertise.

Double Check for Defamation

A libel-detection system could help news outlets and social media companies stay out of legal hot water.

What’s new: CaliberAI, an Irish startup, scans text for statements that could be considered defamatory, Wired reported. You can try it here.

How it works: The system uses custom models to assess whether assertions that a person or group did something illegal, immoral, or otherwise taboo meet the legal definition of defamation.

Behind the news: News organizations are finding diverse uses for natural language processing.

Why it matters: A defamation warning system could help news organizations avoid expensive, time-consuming lawsuits. That’s especially important in Europe and other places where such suits are easier to file than in the U.S. Social media networks may soon need similar tools. Proposed rules in the EU and UK would hold such companies legally accountable for defamatory or harmful material published on their platforms. U.S. lawmakers are eyeing similar legislation.

We’re thinking: Defamation detection may be a double-edged sword. While it has clear benefits, it could also have a chilling effect on journalists, bloggers, and other writers by making them wary of writing anything critical of anyone.

A MESSAGE FROM DEEPLEARNING.AI

The first two courses in our Machine Learning Engineering for Production (MLOps) Specialization are live on Coursera! Enroll now

Bias By the Book

Researchers found serious flaws in an influential language dataset, highlighting the need for better documentation of data used in machine learning.

What’s new: Northwestern University researchers Jack Bandy and Nicholas Vincent investigated BookCorpus, which has been used to train at least 30 large language models. They found several ways it could impart social biases.

What they found: The researchers highlighted shortcomings that undermine the dataset’s usefulness.

Behind the news: The study’s authors were inspired by previous work by researchers Emily Bender and Timnit Gebru, who proposed a standardized method for reporting how and why datasets are designed. The pair outlined in a later paper how lack of information about what goes into datasets can lead to “documentation debt,” costs incurred when data issues lead to problems in a model’s output.

Why it matters: Skewed training data can have substantial effects on a model’s output. Thorough documentation can warn engineers of limitations and nudge researchers to build better datasets, and maybe even prevent unforeseen copyright violations.

We’re thinking: If you train an AI model on a library full of books and find it biased, you have only your shelf to blame.

What Machines Want to See

Researchers typically downsize images for vision networks to accommodate limited memory and accelerate processing. A new method not only compresses images but yields better classification.

What’s new: Hossein Talebi and Peyman Milanfar at Google built a learned image preprocessor that improved the accuracy of image recognition models trained on its output.

Key insight: Common approaches to downsizing, such as bilinear and bicubic methods, interpolate between pixels to determine the colors of pixels in a smaller version of an image. Information is lost in the process, which may degrade the performance of models trained on the downsized images. One solution is to train a resizing model and a classification model together, so the resizer learns to keep the information the classifier needs.

How it works: The network comprises a bilinear resizer layer sandwiched between convolutional layers to enable it to accept any input image size.
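To make the conventional baseline concrete, here is a minimal sketch of plain bilinear downsizing in pure Python. It is not the authors' learned resizer. The function name `bilinear_resize`, the grayscale list-of-rows image format, and the half-pixel-center sampling convention are all assumptions chosen for illustration.

```python
def bilinear_resize(image, out_h, out_w):
    """Resize a 2-D grayscale image (list of rows of numbers) bilinearly.

    Illustrative only: assumes the half-pixel-center sampling convention
    and clamps sample positions at the image border.
    """
    in_h, in_w = len(image), len(image[0])
    out = []
    for i in range(out_h):
        # Map the center of output row i back into source coordinates.
        y = (i + 0.5) * in_h / out_h - 0.5
        y = min(max(y, 0.0), in_h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            x = (j + 0.5) * in_w / out_w - 0.5
            x = min(max(x, 0.0), in_w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            # Blend the four neighboring source pixels.
            top = image[y0][x0] * (1 - wx) + image[y0][x1] * wx
            bottom = image[y1][x0] * (1 - wx) + image[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


# A 4x4 checkerboard: fine detail that a 2x2 image cannot represent.
checker = [[(r + c) % 2 for c in range(4)] for r in range(4)]
small = bilinear_resize(checker, 2, 2)
print(small)
```

In this toy case every output pixel comes out as the same mid-gray value, so the checkerboard pattern disappears entirely. This is the kind of information loss that a learned resizer tries to reduce.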

Results: The authors’ approach achieved top-5 error on ImageNet of 10.8 percent. The baseline model achieved 12.8 percent.

Yes, but: The proposed method required 35 percent more computation (7.65 billion FLOPs) than the baseline (5.67 billion FLOPs).

Why it matters: Machine learning engineers have adopted conventional resizing methods without considering their impact on performance. If we must discard information, we can devise an algorithm that learns to keep what’s most important.

We’re thinking: In between training vision networks, you might use this image processor to produce mildly interesting digital art.

Work With Andrew Ng

Head of Applied Science: Workera is looking for a head of applied science to make our data valid and reliable and leverage it to create algorithms that solve novel problems in talent and learning. You will own our data science and machine learning practice and work on challenging and exciting problems including personalized learning and computerized adaptive testing. Apply here

Head of Engineering: Workera is looking for an engineering leader to manage and grow our world-class team and unlock its potential. You will lead engineering execution and delivery, make Workera a rewarding workplace for engineers, and participate in company oversight. Apply here

Company Builder (U.S.): AI Fund is looking for a senior associate or principal-level candidate to join our investment team to help us build and incubate ideas that are generated internally. This position will work closely with Andrew on a variety of AI problems. Strong business acumen and market research capabilities are more important than technical background. Apply here

Subscribe and view previous issues here.

Thoughts, suggestions, feedback? Please send them to thebatch@deeplearning.ai. To keep our newsletter out of your spam folder, add our email address to your contacts list.
