
Dear friends,

Nearly a decade ago, I got excited by self-taught learning and unsupervised feature learning — ways to learn features from unlabeled data that afterward can be used in a supervised task. These ideas contributed only marginally to practical performance back then, but I’m pleased to see their resurgence and real traction in self-supervised learning.

Many of you know the story of how the increasing scale of computation and data, coupled with innovation in algorithms, drove the rise of deep learning. Recent progress in self-supervised learning also appears to be powered by greater computational and data scale — we can now train large neural networks on much larger unlabeled datasets — together with new algorithms like contrastive predictive coding.

Today feels very much like the early, heady days a decade-plus ago, when we saw neural networks start to work in practical settings. The number of exciting research directions seems larger than ever!

Keep learning,

Andrew

News

Glimpse My Ride

Police in the U.S. routinely use AI to track cars with little accountability to the public.

What happened: Documents obtained by Wired revealed just how intensively police in Los Angeles, California, have been using automatic license plate readers. Officials queried databases of captured plate numbers hundreds of thousands of times in 2016 alone, records show.

How it works: The Los Angeles Police Department, county sheriff, and other local agencies rely on TBird, a license plate tracking system from data-mining company Palantir.

- Detectives can search for full or partial numbers. The system maps the locations of vehicles with matching plates, annotated with previous searches and the time each image was captured.

- The system acts as a virtual dragnet, alerting nearby officers whenever cameras spot a flagged plate.

- It also lists all plates that appeared in the vicinity of a crime, along with each vehicle’s color, make, and style, thanks to machine vision from Intrinsics.

- The LAPD pools its license plate records with those of other nearby police departments as well as with data from private cameras located in malls, universities, transit centers, and airports.
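Partial-plate search of the kind described above can be sketched as wildcard matching over stored sightings. This is a minimal illustration, not TBird's implementation, which the reporting doesn't describe. The `Sighting` record, the `?` wildcard convention, and the sample plates and coordinates are all hypothetical.

```python
import re
from dataclasses import dataclass
from datetime import datetime

@dataclass
class Sighting:
    """Hypothetical record of one camera capture."""
    plate: str
    lat: float
    lon: float
    captured_at: datetime

def partial_plate_pattern(query: str):
    # '?' marks one unknown character; every other character must match literally.
    regex = "".join("." if ch == "?" else re.escape(ch) for ch in query.upper())
    return re.compile(regex)

def search(sightings, query):
    """Return sightings whose full plate matches the partial query, oldest first."""
    pattern = partial_plate_pattern(query)
    hits = [s for s in sightings if pattern.fullmatch(s.plate.upper())]
    # Sorting by capture time turns the hits into a timeline that can be mapped.
    return sorted(hits, key=lambda s: s.captured_at)

# Made-up sample data for illustration only.
sightings = [
    Sighting("7ABC123", 34.05, -118.24, datetime(2016, 5, 1, 9, 30)),
    Sighting("7ABD123", 34.06, -118.25, datetime(2016, 5, 1, 8, 15)),
    Sighting("8XYZ999", 34.10, -118.30, datetime(2016, 5, 2, 12, 0)),
]

for s in search(sightings, "7AB?123"):
    print(s.plate, s.lat, s.lon, s.captured_at.isoformat())
```

Using `fullmatch` means a query must account for every character of a plate, so a witness who remembers six of seven characters would mark the seventh with `?` rather than omit it.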

Behind the news: A 2013 survey by the U.S. Dept. of Justice found that many urban police departments use automatic license plate readers. The LAPD was among the first to adopt them, starting in 2009.

Why it matters: License plate readers help solve serious crimes. Wired describes a case in which the LAPD used TBird to search for vehicles spotted near the place where a murdered gang member’s body was found. The plates led them to a rival gang member who eventually was convicted for the homicide.

We’re thinking: Digital tools are becoming important in fighting crime, but it shouldn’t take a reporter’s public records request to find out how police are using them. We support regulations that require public agencies to disclose their use of surveillance technology, as well as rigorous logging and auditing to prevent misuse.

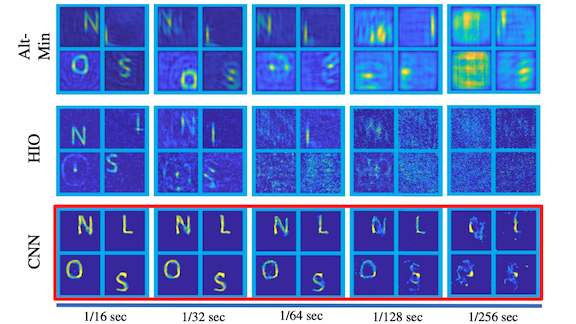

Periscope Vision

Wouldn’t it be great to see around corners? Deep learning researchers are working on it.

What’s new: Stanford researcher Christopher Metzler and colleagues at Princeton, Southern Methodist University, and Rice University developed deep-inverse correlography, a technique that interprets reflected light to reveal objects outside the line of sight. The technique can capture submillimeter details from one meter away, making it possible to, say, read a license plate around a corner.

Key insight: Light bouncing off objects retains information about their shape even as it ricochets off walls. The researchers modeled the distortions likely to occur under such conditions and generated a dataset accordingly, enabling them to train a neural network to extract images of objects from their diminished, diffuse, noisy reflections.

How it works: The experimental setup included an off-the-shelf laser and camera, a rough wall (called a virtual detector), and a U-Net convolutional neural network trained to reconstruct an image from its reflections.

- To train the U-Net, the researchers generated over 1 million pairs of images. In each pair, one image was a simple curve (the team deemed natural images infeasible) and the other a simulation of the corresponding reflections.

- The researchers shined the laser at a wall. Bouncing off the wall, the light struck an object around the corner. The light caromed off the object, back to the wall, and into the camera.

- The U-Net accepted the camera’s output and disentangled interference patterns in the light waves to reconstruct an image.
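The training-data step can be sketched in a few lines of NumPy. The sketch assumes, as a simplification, that the network's input is a noisy estimate of the hidden object's autocorrelation, the quantity correlography-style methods estimate from speckled reflections. The random-curve generator, image size, and Gaussian noise level are illustrative choices, not the researchers' settings.

```python
import numpy as np

def random_curve_image(size=64, n_points=400, rng=None):
    """Draw one smooth random curve on a blank canvas (a stand-in for the 'simple curve' targets)."""
    rng = np.random.default_rng() if rng is None else rng
    t = np.linspace(0.0, 1.0, n_points)
    # Random cubic polynomials in t trace out a smooth planar curve.
    xs = np.polyval(rng.uniform(-1.0, 1.0, 4), t)
    ys = np.polyval(rng.uniform(-1.0, 1.0, 4), t)

    def to_pixels(v):
        # Rescale coordinates into the central 60 percent of the canvas.
        span = v.max() - v.min()
        v = (v - v.min()) / (span if span > 0 else 1.0)
        return np.round((0.2 + 0.6 * v) * (size - 1)).astype(int)

    img = np.zeros((size, size))
    img[to_pixels(ys), to_pixels(xs)] = 1.0
    return img

def autocorrelation(img):
    """Linear autocorrelation via the Wiener-Khinchin theorem (inverse FFT of the power spectrum).
    Zero-padding to twice the size avoids wraparound from the circular FFT."""
    h, w = img.shape
    spectrum = np.fft.fft2(img, s=(2 * h, 2 * w))
    ac = np.fft.ifft2(np.abs(spectrum) ** 2).real
    return np.fft.fftshift(ac)  # move the zero-shift peak to the center

def make_training_pair(size=64, noise_std=0.05, rng=None):
    """Return (network input, target): a noisy autocorrelation and the curve that produced it."""
    rng = np.random.default_rng() if rng is None else rng
    target = random_curve_image(size, rng=rng)
    ac = autocorrelation(target)
    ac /= ac.max()  # normalize so the zero-shift peak equals 1
    noisy = ac + rng.normal(0.0, noise_std, ac.shape)  # crude stand-in for speckle and sensor noise
    return noisy.astype(np.float32), target.astype(np.float32)

rng = np.random.default_rng(0)
x, y = make_training_pair(rng=rng)
print(x.shape, y.shape)  # (128, 128) (64, 64)
```

Because an autocorrelation discards Fourier phase, mapping `x` back to `y` is a phase-retrieval problem; under this simplification, that inversion is what the U-Net would be trained to perform.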

Results: The researchers tested the system by spying hidden letters and numbers 1 centimeter tall. Given the current state of non-line-of-sight vision, quantitative evaluation wasn’t practical, since the camera inevitably fails to capture an unknown amount of detail. Qualitatively, however, the researchers deemed their system’s output a substantial improvement over the previous state of the art. Moreover, the U-Net is thousands of times faster.

Yes, but: Having been trained on simple generated images, the system perceived only simple outlines. Moreover, the simulation on which the model was trained may not correspond to real-world situations closely enough to be generally useful.

Why it matters: Researchers saw around corners.

We’re thinking: The current implementation likely is far from practical applications. But it is a reminder that AI can tease out information from subtle cues that are imperceptible to humans. Here's another example.

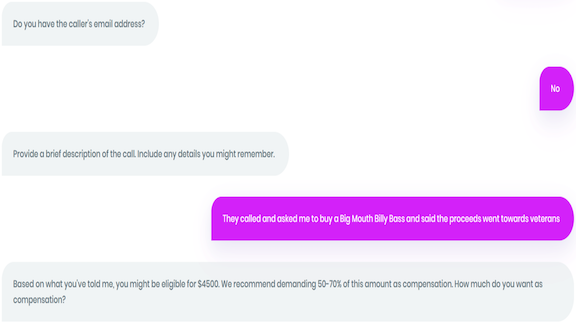

Robocallers vs Robolawyer

A digital attorney is helping consumers take telemarketers and phone scammers to court.

What’s new: DoNotPay makes an app billed as the world’s first robot lawyer. Its latest offering, Robo Revenge, automates the process of suing intrusive robocallers.

How it works: U.S. law entitles consumers who have added their phone number to the National Do Not Call Registry to sue violators for $3,000 on average. For $3 a month, Robo Revenge makes it easy:

- The system generates a special credit card number that users can give to spam callers. When a telemarketer processes the card, Robo Revenge uses the transaction information to determine the company’s legal identity.

- After the call, users can open a chat session to enter information they’ve gathered.

- Then the system draws on the chat log, transaction data, and local, state, and federal laws to file a lawsuit automatically.

Behind the news: Joshua Browder founded DoNotPay in 2016 to help people fight parking tickets. Since then, he has added tools that cancel unwanted subscriptions, navigate customer service labyrinths, and sue airlines for cancelled flights. In 2018, Browder told Vice that DoNotPay wins about 50 percent of cases, earning clients $7,000 per successful lawsuit on average.

Why it matters: The average American receives 18 telemarketing calls a month — even though the Do Not Call Registry contains 240 million numbers, enough to cover around 70 percent of the U.S. population. Spam callers might not be so aggressive if their marks were likely to sue.

We’re thinking: We're not fans of making society even more litigious. But we could be persuaded to make an exception for scofflaw telespammers.

A MESSAGE FROM DEEPLEARNING.AI


Explore federated learning and how you can retrain deployed models while maintaining user privacy. Take the new course on advanced deployment scenarios in TensorFlow. Enroll now

Meeting of the Minds

Geoffrey Hinton, Yoshua Bengio, and Yann LeCun presented their latest thinking about deep learning’s limitations and how to overcome them.

What’s new: The deep-learning pioneers discussed how to improve machine learning, perception, and reasoning at the Association for the Advancement of Artificial Intelligence conference in New York.

What they said: Deep learning needs better ways to understand the world, they said. Each is working toward that goal from a different angle:

- Bengio, a professor at the Université de Montréal, observed that deep learning’s results are analogous to the type of human thought — which cognitive scientists call system 1 thinking — that is habitual and occurs below the surface of consciousness. He aims to develop systems capable of the more attentive system 2 thinking that enables people to respond effectively to novel situations. He described a consciousness prior, made up of high-level variables with a high probability of being true, that would enable AI agents to track abstract changes in the world. That way, understanding, say, whether a person is wearing glasses would be a matter of one bit rather than many pixels.

- Hinton, who divides his time between Google Brain and the University of Toronto, began by noting the limitations of convolutional neural networks when it comes to understanding three-dimensional objects. The latest version of his stacked-capsule autoencoder is designed to overcome that issue. The model learns to represent objects independently of their orientation in space, so it can recognize objects despite variations in point of view, lighting, or noise.

- Facebook AI chief LeCun noted that, while supervised learning has accomplished amazing things, it requires tremendous amounts of labeled data. Self-supervised learning, in which a network learns by filling in blanks in input data, opens new vistas. This technique has had great success in language models, but it has yet to work well in visual tasks. To bridge the gap, LeCun is betting on energy-based models that measure compatibility between an observation (such as a segment of video) and the desired prediction (such as the next frame).
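The energy-based idea can be illustrated with a toy model: a scalar energy that is low when an observation and a candidate prediction are compatible, with inference done by picking the lowest-energy candidate. This is a generic sketch, not LeCun's model; the linear encoders `W_x` and `W_y` are hypothetical stand-ins for trained networks, and only inference is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
dim_x, dim_y, dim_z = 8, 8, 4

# Hypothetical linear encoders; in a real system these would be trained networks.
W_x = rng.normal(size=(dim_z, dim_x))  # embeds the observation (e.g., a video segment)
W_y = rng.normal(size=(dim_z, dim_y))  # embeds a candidate prediction (e.g., a next frame)

def energy(x, y):
    """Squared distance between embeddings: low energy means x and y are compatible."""
    return float(np.sum((W_x @ x - W_y @ y) ** 2))

def predict(x, candidates):
    """Inference in an energy-based model: pick the candidate with the lowest energy."""
    energies = [energy(x, y) for y in candidates]
    return int(np.argmin(energies)), energies

x = rng.normal(size=dim_x)
# Construct one candidate whose embedding matches x exactly (least-squares solve).
y_match = np.linalg.lstsq(W_y, W_x @ x, rcond=None)[0]
candidates = [rng.normal(size=dim_y) for _ in range(3)] + [y_match]

best, energies = predict(x, candidates)
print(best, [round(e, 3) for e in energies])
```

Because `W_y` maps eight dimensions down to four, many different `y` vectors reach zero energy for the same `x`. That is a toy version of why an energy function can score several compatible predictions as equally good rather than forcing a single averaged guess. Training, which would push energy down on observed pairs and up on mismatched ones, is not shown.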

Behind the news: The Association for Computing Machinery awarded Bengio, Hinton, and LeCun the 2018 A. M. Turing Award for their work. The association credits the trio’s accomplishments, including breakthroughs in backpropagation, computer vision, and natural language processing, with reinvigorating AI.

Words of wisdom: Asked by the moderator what students of machine learning should read, Hinton offered the counterintuitive observation that “reading rots the mind.” He recommended that practitioners figure out how they would solve a given problem, and only then read about how others solved it.

We’re thinking: We apologize for rotting your mind.

Trading Faces

AI’s ability to transfer a person’s face from a source photo onto someone in a target photo doesn’t work so well when the target face is partially obscured by, say, eyeglasses, a veil, or a hand. A new technique handles such occlusions.

What’s new: Lingzhi Li at Peking University and collaborators at Microsoft Research propose FaceShifter, a face-swapping system that accurately reproduces both the source face and any elements that occlude the target face.

Key insight: It’s easier to reproduce occlusions if you’ve scoped them out ahead of time. FaceShifter takes an extra step to identify occlusions before it renders the final image.

How it works: The system has two major components. Adaptive Embedding Integration Network (AEI-Net) spots occlusions and generates a preliminary swap. Heuristic Error Acknowledging Refinement Network (HEAR-Net) then refines the swap.

- AEI-Net identifies troublesome occlusions by using the target image as both source and target. The difference between its input and output highlights any occlusions it failed to reproduce.

- AEI-Net extracts face features from a source image. It learns to extract non-face features from the target image in multiple resolutions, so it can capture larger and smaller shapes.

- AEI-Net’s generator combines these features into an intermediate representation, using attention to focus on the most relevant features.

- HEAR-Net uses the occlusion-only and intermediate images to generate a final image. It’s trained to strike a balance between maintaining the source face’s distinctiveness, minimizing changes in AEI-Net’s output, and reproducing images accurately when the source and target are the same.
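The self-check and the three training objectives described above can be sketched in NumPy. This is a minimal illustration, not the authors' code: `aei_net` and `identity_embed` are placeholders for trained networks, the toy versions below are hypothetical, and the specific distance measures and equal weighting of the three terms are assumptions.

```python
import numpy as np

def heuristic_error(target, aei_net):
    """Feed the target as both source and target. Whatever AEI-Net fails to
    reproduce (glasses, a veil, a hand) shows up in the difference."""
    return target - aei_net(target, target)

def cosine_similarity(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def hear_net_loss(output, aei_output, source, target, identity_embed):
    """Sum of three objectives (equal weights are an assumption)."""
    # 1) Preserve the source face's identity.
    id_loss = 1.0 - cosine_similarity(identity_embed(output), identity_embed(source))
    # 2) Stay close to AEI-Net's preliminary swap.
    chg_loss = float(np.mean(np.abs(output - aei_output)))
    # 3) When source and target are the same image, reproduce it exactly.
    same = np.array_equal(source, target)
    rec_loss = 0.5 * float(np.mean((output - target) ** 2)) if same else 0.0
    return id_loss + chg_loss + rec_loss

# Toy stand-ins (hypothetical), only to exercise the functions above.
def toy_aei_net(source, target):
    # Reproduces the target except bright "occluder" pixels, which it paints flat.
    out = target.copy()
    out[target > 0.9] = 0.5
    return out

def toy_identity_embed(img):
    return img.reshape(-1)  # raw pixels in place of a face-recognition embedding

face = np.full((8, 8), 0.5)
face[2:4, 1:7] = 1.0  # a bright bar standing in for eyeglasses

err = heuristic_error(face, toy_aei_net)
print("occluded pixels found:", int((err != 0).sum()))  # 12
print("loss, perfect self-swap:", hear_net_loss(face, face, face, face, toy_identity_embed))
```

The heuristic error is nonzero exactly where the toy generator dropped the occluder, which is the signal HEAR-Net receives alongside AEI-Net's output.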

Results: The researchers used a pretrained face classifier to evaluate how well FaceShifter maintained the distinctiveness of the source face compared with DeepFakes and FaceSwap. FaceShifter achieved 97.38 percent accuracy versus 82.41 percent, the next-best score. It also outscored the other models in human evaluations of realism, identity, and attributes such as pose, facial expression, and lighting.

Why it matters: FaceShifter introduces a novel method to check its own work. Although the researchers focused on swapping faces, a similar approach could be used to tackle challenges like combating adversarial examples.

We’re thinking: Better face swapping one day could transform entertainment by, say, grafting new stars — or even audience members — into movies. But it’s also potentially another tool in the arsenal of deepfakers who aim to deceive.

Business Pushes the Envelope

The business world continues to shape deep learning’s future.

What’s new: Commerce is pushing AI toward more efficient consumption of data, energy, and labor, according to a report on trends in machine learning from market analyst CB Insights.

What they think: The report draws on a variety of sources including records of mergers and acquisitions, investment tallies, and patent filings. Among its conclusions:

- Consumers are increasingly concerned about data security. One solution may be federated learning, the report says. Tencent’s WeBank is developing this approach to run credit checks without removing consumers’ data from their devices. Similarly, Nvidia’s Clara allows hospitals to share diagnostic models trained on patient data without compromising the data itself.

- AI’s success so far has relied on big data, but uses in which large quantities of labeled data are hard to come by require special techniques. One solution to this small data problem is to synthesize training examples, such as faux MRIs that accurately portray rare diseases. Another is transfer learning, in which a model trained on an ample dataset is fine-tuned on a much smaller one.

- Businesses can have a tough time finding the right models for their needs, given the shortage of AI specialists and the variety of neural network architectures to choose from. One increasingly popular solution: AI tools that automate the design of neural networks, such as Google's AutoML.

- Demand for AI in smartphones, laptops, and the like is pushing consumer electronics companies toward higher-efficiency models. That helps explain Apple's purchase of edge-computing startup Xnor.ai in January.
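Federated learning, the first trend in the list above, is commonly implemented with federated averaging: each device trains on its own data, and only model weights travel to a server, which averages them in proportion to how much data each device holds. The sketch below uses a linear model and simulated clients in NumPy. It is a generic illustration under those assumptions, not how WeBank or Nvidia Clara work, and it omits the secure aggregation and other privacy protections a real deployment would need.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=20):
    """Run a few epochs of gradient descent on one client's private data (linear regression)."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def federated_averaging(clients, dim, rounds=30):
    """Each round: clients train locally, then the server averages weights by dataset size."""
    global_w = np.zeros(dim)
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    for _ in range(rounds):
        # Only the updated weights are returned to the server; X and y stay on each client.
        local_ws = [local_update(global_w, X, y) for X, y in clients]
        global_w = np.average(local_ws, axis=0, weights=sizes)
    return global_w

rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0, 0.5])
clients = []
for n in (50, 120, 80):  # three simulated devices with different amounts of data
    X = rng.normal(size=(n, 3))
    y = X @ true_w + rng.normal(scale=0.01, size=n)
    clients.append((X, y))

w = federated_averaging(clients, dim=3)
print(np.round(w, 2))
```

Weighting by dataset size keeps a device with a handful of examples from pulling the shared model as hard as one with thousands.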

We’re thinking: It’s great to see today's research findings find their way into tomorrow's commercial applications. The road from the AI lab to the marketplace gets busier all the time.

Work With Andrew Ng

Machine Learning Engineer: Bearing AI, an AI Fund portfolio company, is looking for a talented machine learning engineer. You will be responsible for scalable systems including data pipelines, training, and analyzing performance in a production environment. Apply here

Product Marketing Lead: AI Fund is looking for a product marketing lead to help build AI solutions for healthcare, financial services, human capital, logistics, and more. You will be part of the investment team that assesses market needs, analyzes pain points, and solves problems. Apply here

Director of Marketing and Comms: deeplearning.ai, an education company dedicated to advancing the global community of AI talent, seeks a proven marketing generalist. You will be responsible for branding, content, and events that build and engage the deeplearning.ai community. Apply here

Chief Technology Officer: TalkTime, an AI Fund portfolio company, is looking for a Chief Technology Officer. You will be responsible for creating the most effective way for customers and companies to interact, leveraging AI, natural-language processing, and a mobile-first interface. Apply here

Senior Full-Stack Engineer in Medellín, Colombia: Bearing AI is looking for an experienced full-stack engineer. This position is responsible for building out the API to support our web app as well as helping develop new AI-driven products. Contact our team directly at estephania@aifund.ai or apply here

Front-End Engineer in Medellín, Colombia: Bearing AI is looking for a talented engineer to take end-to-end ownership of our web app and collaborate closely with our designer and back-end engineering team. Contact our team directly at estephania@aifund.ai or apply here

Thoughts, suggestions, feedback? Please send to thebatch@deeplearning.ai. Subscribe here and add our address to your contacts list so our mailings don't end up in the spam folder. You can unsubscribe from this newsletter or update your preferences here.

Copyright 2020 deeplearning.ai, 195 Page Mill Road, Suite 115, Palo Alto, California 94306, United States. All rights reserved.