|

Dear friends,

I think AI agent workflows will drive massive AI progress this year — perhaps even more than the next generation of foundation models. This is an important trend, and I urge everyone who works in AI to pay attention to it.

This iterative process is critical for most human writers to write good text. With AI, such an iterative workflow yields much better results than writing in a single pass.

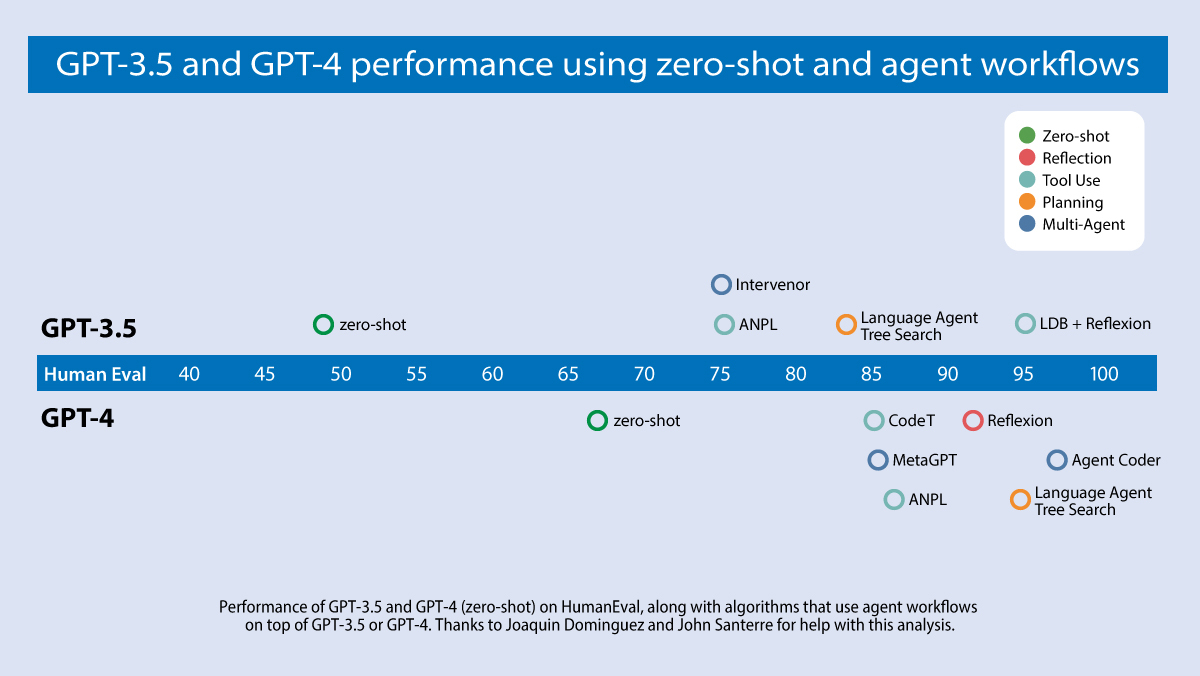

Devin’s splashy demo recently received a lot of social media buzz. My team has been closely following the evolution of AI that writes code. We analyzed results from a number of research teams, focusing on an algorithm’s ability to do well on the widely used HumanEval coding benchmark. You can see our findings in the diagram below.

GPT-3.5 (zero shot) was 48.1% correct. GPT-4 (zero shot) does better at 67.0%. However, the improvement from GPT-3.5 to GPT-4 is dwarfed by incorporating an iterative agent workflow. Indeed, wrapped in an agent loop, GPT-3.5 achieves up to 95.1%.

Next week, I’ll elaborate on these design patterns and offer suggested readings for each.

Keep learning! Andrew

P.S. Build an optimized large language model (LLM) inference system from the ground up in our new short course “Efficiently Serving LLMs,” taught by Predibase CTO Travis Addair.

News

Conversational RobotsRobots equipped with large language models are asking their human overseers for help. What's new: Andrew Sohn and colleagues at Covariant launched RFM-1, a model that enables robots to respond to tinstructions, answer questions about what they see, and request further instructions. The model is available to Covariant customers. How it works: RFM-1 is a transformer that comprises 8 billion parameters. The team started with a pretrained large language model and further trained it, given text, images, videos, robot actions, robot sensor readings, to predict the next token of any of those types. Images and videos are limited to 512x512 pixels and 5 frames per second.

Behind the news: Covariant’s announcement follows a wave of robotics research in recent years that enables robots to take action in response to text instructions. Why it matters: Giving robots the ability to respond to natural language input not only makes them easier to control, it also enables them to interact with humans in new ways that are surprising and useful. In addition, operators can change how the robots work by issuing text instructions rather than programming new actions from scratch. We're thinking: Many people fear that robots will make humans obsolete. Without downplaying such worries, Covariant’s conversational robot illustrates one way in which robots can work alongside humans without replacing them.

Some Models Pose Security RiskSecurity researchers sounded the alarm about holes in Hugging Face’s platform.

Malicious mimicry: Separately, HiddenLayer, a security startup, demonstrated a way to compromise Safetensors, an alternative to Pickle that stores data arrays more securely. The researchers built a malicious PyTorch model that enabled them to mimic the Safetensors conversion bot. In this way, an attacker could send pull requests to any model that gives security clearance to the Safetensors bot, making it possible to execute arbitrary code; view all repositories, model weights, and other data; and replace users’ models. Behind the News: Hugging Face implements a variety of security measures. In most cases, it flags potential issues but does not remove the model from the site; users download at their own risk. Typically, security issues on the site arise when users inadvertently make their own information available. For instance, in December 2023, Lasso Security discovered available API tokens that afforded access to over 600 accounts belonging to organizations like Google, Meta, and Microsoft. Why it matters: As the AI community grows, AI developers and users become more attractive targets for malicious attacks. Security teams have discovered vulnerabilities in popular platforms, obscure models, and essential modules like Safetensors. We’re thinking: Security is a top priority whenever private data is concerned, but the time is fast approaching when AI platforms, developers, and users must harden their models, as well as their data, against attacks.

NEW FROM DEEPLEARNING.AIIn our new short course “Efficiently Serving Large Language Models,” you’ll pop the hood on large language model inference servers. Learn how to increase the performance and efficiency of your LLM-powered applications! Enroll today

Deepfakes Become Politics as UsualSynthetic depictions of politicians are taking center stage as the world’s biggest democratic election kicks off. What’s new: India’s political parties have embraced AI-generated campaign messages ahead of the country’s parliamentary elections, which will take place in April and May, Al Jazeera reported. How it works: Prime Minister Narendra Modi, head of the ruling Bharatiya Janata Party (BJP), helped pioneer the use of AI in campaign videos in 2020. They’ve become common in recent state elections.

Meanwhile, in Pakistan: Neighboring Pakistan was deluged with deepfakes in the run-up to its early-February election. Former prime minister Imran Khan, who has been imprisoned on controversial charges since last year, communicated with followers via a clearly marked AI-generated likeness. However, he found himself victimized by deepfakery when an AI-generated likeness of him, source unknown, urged his followers to boycott the polls. Behind the news: Deepfakes have proliferated in India in the absence of comprehensive laws or regulations that govern them. Instead of regulating them directly, government officials have pressured social media operators like Google and Meta to moderate them. What they’re saying: “Manipulating voters by AI is not being considered a sin by any party,” an anonymous Indian political consultant told Al Jazeera. “It is just a part of the campaign strategy.” Why it matters: Political deepfakes are quickly becoming a global phenomenon. Parties from Argentina, the United States, and New Zealand have distributed AI-generated imagery or video. But the sheer scale of India’s national election — in which more than 900 million people are eligible to vote — has made it an active laboratory for synthetic political messages. We’re thinking: Synthetic media has legitimate political uses, especially in a highly multilingual country like India, where it can enable politicians to communicate with the public in a variety of languages and dialects. But unscrupulous parties can also use it to sow misinformation and undermine trust in politicians and media. Regulations are needed to place guardrails around deepfakes in politics. Requiring identification of generated campaign messages would be a good start.

Cutting the Cost of Pretrained ModelsResearch aims to help users select large language models that minimize expenses while maintaining quality. What's new: Lingjiao Chen, Matei Zaharia, and James Zou at Stanford proposed FrugalGPT, a cost-saving method that calls pretrained large language models (LLMs) sequentially, from least to most expensive, and stops when one provides a satisfactory answer. Key insight: In many applications, a less-expensive LLM can produce satisfactory output most of the time. However, a more-expensive LLM may produce satisfactory output more consistently. Thus, using multiple models selectively can save substantially on processing costs. If we arrange LLMs from least to most expensive, we can start with the least expensive one. A separate model can evaluate its output, and if it’s unsatisfactory, another algorithm can automatically call a more expensive LLM, and so on. How it works: The authors used a suite of 12 commercial LLMs, a model that evaluated their output, and an algorithm that selected and ordered them. At the time, the LLMs’ costs (which are subject to change) spanned two orders of magnitude: GPT-4 cost $30/$60 per 1 million tokens of input/output, while GPT-J hosted by Textsynth cost $0.20/$5 per 10 million tokens of input/output.

Results: For each of the three datasets, the authors found the accuracy of each LLM. Then they found the cost for FrugalGPT to match that accuracy. Relative to the most accurate LLM, FrugalGPT saved 98.3 percent, 73.3 percent, and 59.2 percent, respectively. Why it matters: Many teams choose a single model to balance cost and quality (and perhaps speed). This approach offers a way to save money without sacrificing performance. We're thinking: Not all queries require a GPT-4-class model. Now we can pick the right model for the right prompt.

Work With Andrew Ng

Join the teams that are bringing AI to the world! Check out job openings at DeepLearning.AI, AI Fund, and Landing AI.

Subscribe and view previous issues here.

Thoughts, suggestions, feedback? Please send to thebatch@deeplearning.ai. Avoid our newsletter ending up in your spam folder by adding our email address to your contacts list.

|

.png?upscale=true&width=1200&upscale=true&name=The%20Batch%20top%20banners%20(10).png)