Dear friends,

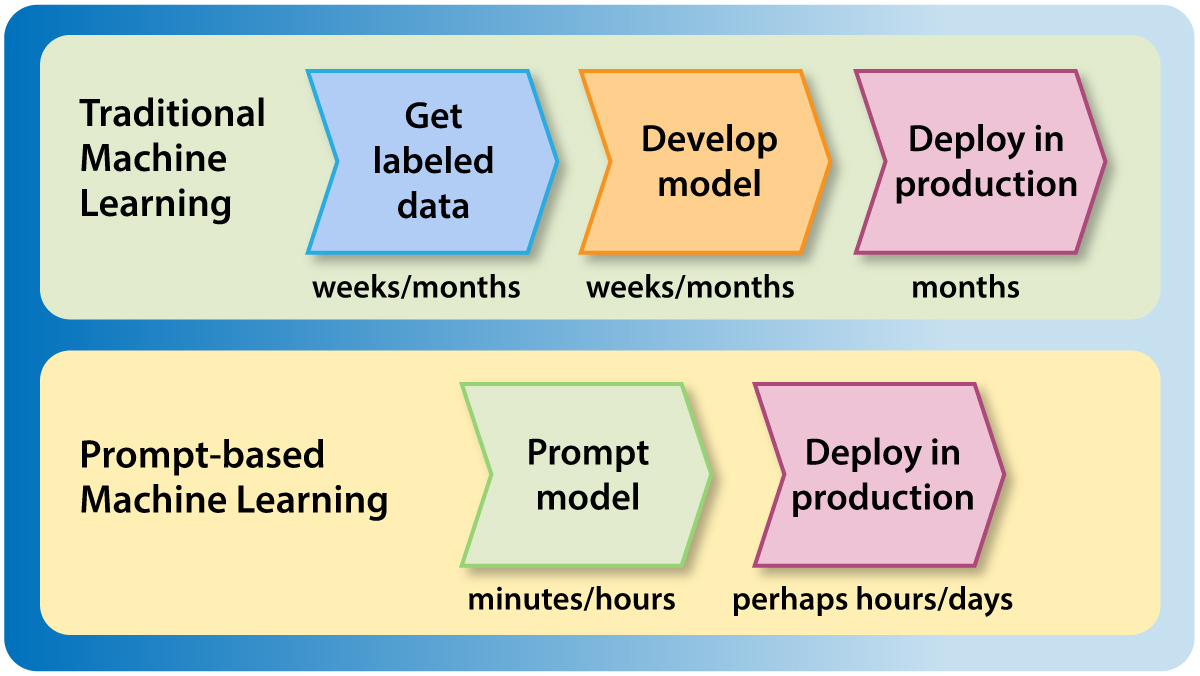

A few weeks ago, I wrote about my Landing AI team’s work on visual prompting. Because text prompting and visual prompting make it so fast to build machine learning applications, I’m seeing a trend toward building and deploying models without using a test set. This is part of an important trend toward getting models into production faster.

The test set has always been a sacred aspect of machine learning development. In academic machine learning work, test sets are the cornerstone of algorithm benchmarking and publishing scientific conclusions. Test sets are also used in commercial machine learning applications to measure and improve performance and to ensure accuracy before and after deployment.

But thanks to prompt-based development, in which you can build a model simply by providing a text prompt (such as “classify the following text as having either a positive or negative sentiment”) or a visual prompt (by labeling a handful of pixels to show the model what object you want to classify), it is possible to build a decent machine learning model with very few examples (few-shot learning) or no examples at all (zero-shot learning).

Previously, if we needed 10,000 labeled training examples, then the additional cost of collecting 1,000 test examples didn’t seem onerous. But the rise of zero-shot and few-shot learning — driven by prompt-based development — is making test set collection a bottleneck.

Thus I'm seeing more and more teams use a process for development and deployment that looks like this:

I’m excited by this process, which significantly shortens the time it takes to build and deploy machine learning models. However, there is one important caveat: In certain applications, a test set is important for managing risk of harm. Many deployments don’t pose a significant risk of harm; for example, a visual inspection system in a smartphone factory that initially shadows a human inspector and whose outputs aren’t used directly yet. But if we're developing a system that will be involved in decisions about healthcare, criminal justice, finance, insurance, and so on, where inaccurate outputs or bias could cause significant harm, then it remains important to collect a rigorous test set and deeply validate the model’s performance before allowing it to make consequential decisions.

The occurrence of concept drift and data drift can make the very notion of a “test set” problematic in practical applications, because the data saved for testing no longer matches the real distribution of input data. For this reason, the best test data is production data. For applications where it’s safe and reasonable to deploy without using a test set, I’m excited about how this can speed up development and deployment of machine learning applications.

Keep learning!

Andrew

News

Google Adds AI Inside and Out

Google showcased a flood of new features in its latest bid to get ahead in the generative AI arms race.

What’s new: The company demonstrated AI features for consumers and developers at its annual I/O conference.

PaLM powered: More than two dozen of the new features, including Bard and Duet AI (see below), are powered by a new large language model called PaLM 2. Google trained PaLM 2 on tasks similar to those in Google's UL2 pretraining framework, using text in more than 100 natural languages and numerous programming languages. It will be available as a cloud service in four unspecified sizes.

App assistance: Duet AI is a suite of text generation tools for Google Workspace and Cloud.

New foundation models: Vertex offers three new foundation models: Chirp for speech-to-text, Codey for code completion, and Imagen for text-to-image generation. Users can join a waitlist via Vertex.

Bard handles images: Users no longer have to join a waitlist for access to the Bard chatbot, and its language capabilities have been expanded from English to include Japanese and Korean. It is now available in 180 countries, though not the EU or Canada. Bard can now respond to image-based queries, provide images in its responses, and generate custom images using Adobe’s image generation model, Firefly.

Search enhancements: An experimental version of Google Search will generate text answers to queries using an unidentified language model.

Why it matters: Google’s new capabilities are the latest salvo in an ongoing competition to capture generative AI’s market potential to greatest effect.

We’re thinking: Just days ago, a leaked Google memo talked about Google and OpenAI’s lack of a moat when it comes to LLM technology. It described how open source LLMs are racing ahead, making it challenging for any company to maintain a significant and enduring lead over competitors in the quality of its models. We think the impressive I/O presentation by Sundar Pichai and team, however, reminded everyone of Google’s tremendous distribution advantages. Google owns many platforms and products (such as Search, Gmail, Android, Chrome, and YouTube) with over 2 billion users, and this gives it numerous ways to get generative AI to users. In the era of generative AI, we are increasingly seeing distribution as a moat for businesses.

The Politics of Language Models

Do language models have their own opinions about politically charged issues? Yes, and they probably don’t match yours.

What's new: Shibani Santurkar and colleagues at Stanford compared opinion-poll responses of large language models with those of various human groups.

How it works: The authors collected multiple-choice questions based on surveys of public opinion in the United States. They compared answers generated by nine language models (three from AI21 Labs and six from OpenAI) with those of 60 demographic groups. The groups varied according to sex, age, race, geography, relationship status, citizenship status, education, political party affiliation, religious affiliation, and degree of religious observance.

Results: The authors compared the distributions of model and human answers according to a formula based on the Wasserstein distance, also known as earth mover’s distance. In their formula, a score of 1 indicates a perfect match.
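To make that kind of score concrete, here is a minimal Python sketch of one way it could be computed for a single multiple-choice question. It assumes the answer choices are ordered and evenly spaced, and that the score is 1 minus the Wasserstein distance divided by its largest possible value. The function names and that normalization are illustrative assumptions, not details stated in the summary above.

```python
import numpy as np

def wasserstein_1d(p, q):
    """Wasserstein-1 distance between two distributions over ordered,
    evenly spaced answer choices (adjacent choices are 1 unit apart)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    # In one dimension, the distance is the summed gap between the two CDFs.
    cdf_gap = np.cumsum(p - q)
    return float(np.abs(cdf_gap[:-1]).sum())

def alignment_score(p, q):
    """Assumed normalization: 1 means identical answer distributions,
    0 means all mass sits on opposite ends of the answer scale."""
    max_distance = len(p) - 1
    return 1.0 - wasserstein_1d(p, q) / max_distance

# Hypothetical answer distributions over four ordered choices.
model_answers = [0.5, 0.5, 0.0, 0.0]
group_answers = [0.0, 0.5, 0.5, 0.0]
print(alignment_score(model_answers, group_answers))
```

A score computed this way rewards near misses: a model that leans one answer choice away from a group scores higher than one that lands at the opposite end of the scale.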

Behind the news: In some circles, ChatGPT has been criticized for expressing a political bias toward liberal (in U.S. terms) positions. Such allegations have prompted developers to build alternative versions that are deliberately biased in other directions. Some observers speculate that Elon Musk’s secretive AI startup is on a similar mission.

Why it matters: Large language models aren’t neutral reflections of society. They express political views that don’t match those of the general population or those of any group. Furthermore, prompting them to take on a particular group’s viewpoint doesn't bring them into line with that group. The AI community (and the world at large) must decide whether and how to manage these biases.

We're thinking: Should a language model’s opinions match those of the global average, or should different language models respond similarly to different groups? Given that a subset of the world’s population holds biased opinions, including sexist or racist views, should we build LLMs that reflect them? Should language models be allowed to express opinions at all? Much work lies ahead to make these choices and figure out how to implement them.

A MESSAGE FROM WORKERA

Identify your organization's generative AI capabilities, skill gaps, and training needs with the world's first generative AI skill assessment, from Workera. Join the beta now!

Automated Into a Job

ChatGPT is helping some workers secretly hold multiple full-time jobs at once.

What’s new: Workers are using OpenAI’s chatbot to boost their productivity so they can earn separate paychecks from a number of employers, each of whom believes they are exclusive employees, Vice reported.

Behind the news: A March 2023 paper by two MIT economists reported that writers who used ChatGPT were 37 percent faster than those who did not.

Why it matters: This practice illustrates the real productivity gains conferred by large language models. Moreover, in a typical corporate environment, managers decide which tools workers will use and how. The “overemployed” community turns that practice on its head, using AI to boost productivity from the bottom up.

We’re thinking: It's discouraging to see people using AI to deceive employers who could benefit from the productivity gains. Beyond the ethical problems, the use of generative AI without informing employers could lead to legal questions in areas like ownership of intellectual property. Yes, let’s use these tools to be more productive, but let’s do it in honest and ethical ways.

Text-to-3D Without 3D Training Data

Researchers struggle to build models that can generate a three-dimensional scene from a text prompt largely because they lack sufficient paired text-3D training examples. A new approach works without any 3D data whatsoever.

What's new: Ben Poole and colleagues at Google and UC Berkeley built DreamFusion to produce 3D scenes from text prompts. Rather than training on text-3D pairs, the authors used a pretrained text-to-image diffusion model to guide the training of a separate model that learned to represent a 3D scene.

Key insight: A neural radiance field (NeRF) learns to represent a 3D scene from 2D images of that scene. Is it possible to replace the 2D images with a text prompt? Not directly, but a pretrained text-to-image diffusion model, which generates images by starting with noise and removing the noise in several steps, can take a text prompt and generate 2D images for NeRF to learn from. The NeRF image (with added noise) conditions the diffusion model, and the diffusion model’s output provides ground truth for the NeRF.

How it works: NeRF generated a 2D image, and the authors added noise. Given the noisy NeRF image and a text prompt, a 64x64 pixel version of Google's Imagen text-to-image diffusion model removed the noise to produce a picture that reflected the prompt. By repeating these steps, NeRF gradually narrowed the difference between its output and Imagen’s.
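The render, add noise, denoise, and update loop described above can be illustrated with a toy Python sketch. This is not DreamFusion's implementation. The "scene" here is just a flat array of pixel values rather than a NeRF, and `toy_denoise` is a hypothetical stand-in for a pretrained text-to-image diffusion model that assumes images matching the prompt cluster tightly around a known `target`. All names and constants are assumptions chosen for illustration.

```python
import numpy as np

def render(scene):
    """Toy renderer: the 'scene' is already a flat image.
    A real NeRF would render a 2D view of a 3D scene here."""
    return scene

def toy_denoise(noisy, target, noise_std, prior_std=0.1):
    """Hypothetical stand-in for a text-conditioned diffusion model.
    Assumes prompt-matching images are Gaussian around `target`, so the
    denoised picture is the posterior mean given the noisy input."""
    w = prior_std**2 / (prior_std**2 + noise_std**2)
    return w * noisy + (1.0 - w) * target

def fit_scene(target, steps=200, lr=0.1, noise_std=1.0, seed=0):
    rng = np.random.default_rng(seed)
    scene = np.zeros_like(target)
    for _ in range(steps):
        image = render(scene)
        # Add noise to the rendered image.
        noisy = image + noise_std * rng.standard_normal(image.shape)
        # The stand-in model removes the noise, guided by the "prompt".
        denoised = toy_denoise(noisy, target, noise_std)
        # Nudge the scene so its rendering moves toward the denoised picture.
        scene = scene + lr * (denoised - image)
    return scene

target = np.array([0.2, 0.8, 0.5, 0.1])  # what the "prompt" describes
scene = fit_scene(target)
print(np.round(scene, 2))
```

In this toy, the denoised picture acts as the training target at every step, which mirrors the idea that the diffusion model's output supervises the scene representation.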

Results: The authors compared DreamFusion images to 2D renders of output from CLIP-Mesh, which deforms a 3D mesh to fit a text description. They evaluated the systems according to CLIP R-Precision, a metric that measures the similarity between an image and a text description. For each system, they compared the percentage of images that were more similar to the prompt than to 153 other text descriptions. DreamFusion achieved 77.5 percent while CLIP-Mesh achieved 75.8 percent. (The authors note that DreamFusion’s advantage is all the more impressive considering an overlap between the test procedure and CLIP-Mesh’s training.)

Why it matters: While text-3D data is rare, text-image data is plentiful. This enabled the authors to devise a clever twist on supervised learning: To train NeRF to transform text into 3D, they used Imagen’s text-to-image output as a supervisory signal.

We're thinking: This work joins several demonstrations of the varied uses of pretrained diffusion models.
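The CLIP R-Precision metric mentioned in the results can be sketched in a few lines of Python. The sketch assumes image and text embeddings are already available (in practice they would come from CLIP) and uses made-up 2D vectors in their place. The function name and the toy data are hypothetical.

```python
import numpy as np

def r_precision(image_emb, text_emb, true_text_idx):
    """Fraction of images whose most similar text (by cosine similarity)
    is the prompt that was used to generate them."""
    image_emb = np.asarray(image_emb, dtype=float)
    text_emb = np.asarray(text_emb, dtype=float)
    # Normalize rows so the dot product equals cosine similarity.
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    similarity = image_emb @ text_emb.T  # shape: (num_images, num_texts)
    best_text = similarity.argmax(axis=1)
    return float(np.mean(best_text == np.asarray(true_text_idx)))

# Made-up embeddings: three images, three candidate text descriptions.
images = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
texts = [[1.0, 0.1], [0.1, 1.0], [1.0, -1.0]]
score = r_precision(images, texts, true_text_idx=[0, 1, 2])
print(f"R-Precision: {score:.3f}")
```

In this toy example, the third image is closer to the first two descriptions than to its own prompt, so two of the three images are retrieved correctly.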

Work With Andrew Ng

AI/ML Researcher/Engineer: Esteam seeks an artificial intelligence and machine learning engineer responsible for end-to-end ownership and scaling of its natural language processing, voice recognition, generative, and large language models. The ideal candidate has a clear understanding of deep learning, graphical models, reinforcement learning, computer perception, natural language processing, and data representation. Apply here

Customer Success Engineer: Landing AI seeks an engineer to build the foundation of the customer success function and handle account management inquiries, renewals, implementation/services, technical support, customer education, and operations. The ideal candidate is customer-oriented and experienced in the artificial intelligence/machine learning industry. Apply here

Curriculum Product Manager: DeepLearning.AI seeks a curriculum product manager to create high-quality educational content with our network of AI experts. The ideal candidate has experience as an educator, technical AI skills, and a strong sense of teamwork. Apply here

DevOps Engineer: DeepLearning.AI seeks an engineer with strong computer science fundamentals and a passion for improving learners' experiences. The ideal candidate will thrive in an early development stage of a leading educational environment focusing on AI-related topics. The role is responsible for designing, implementing, and maintaining the infrastructure that supports our software development and deployment process. Apply here

Frontend Engineer: DeepLearning.AI seeks an engineer with strong computer science fundamentals to develop our educational products. The role is responsible for building and delivering high-quality experiences for the technical content we are providing. You will work alongside a team of talented content creators and outside partners to build various layers of the infrastructure of world-renowned AI-driven education. Apply here

Subscribe and view previous issues here.

Thoughts, suggestions, feedback? Please send to thebatch@deeplearning.ai. Avoid our newsletter ending up in your spam folder by adding our email address to your contacts list.
